Introduction to Flannel

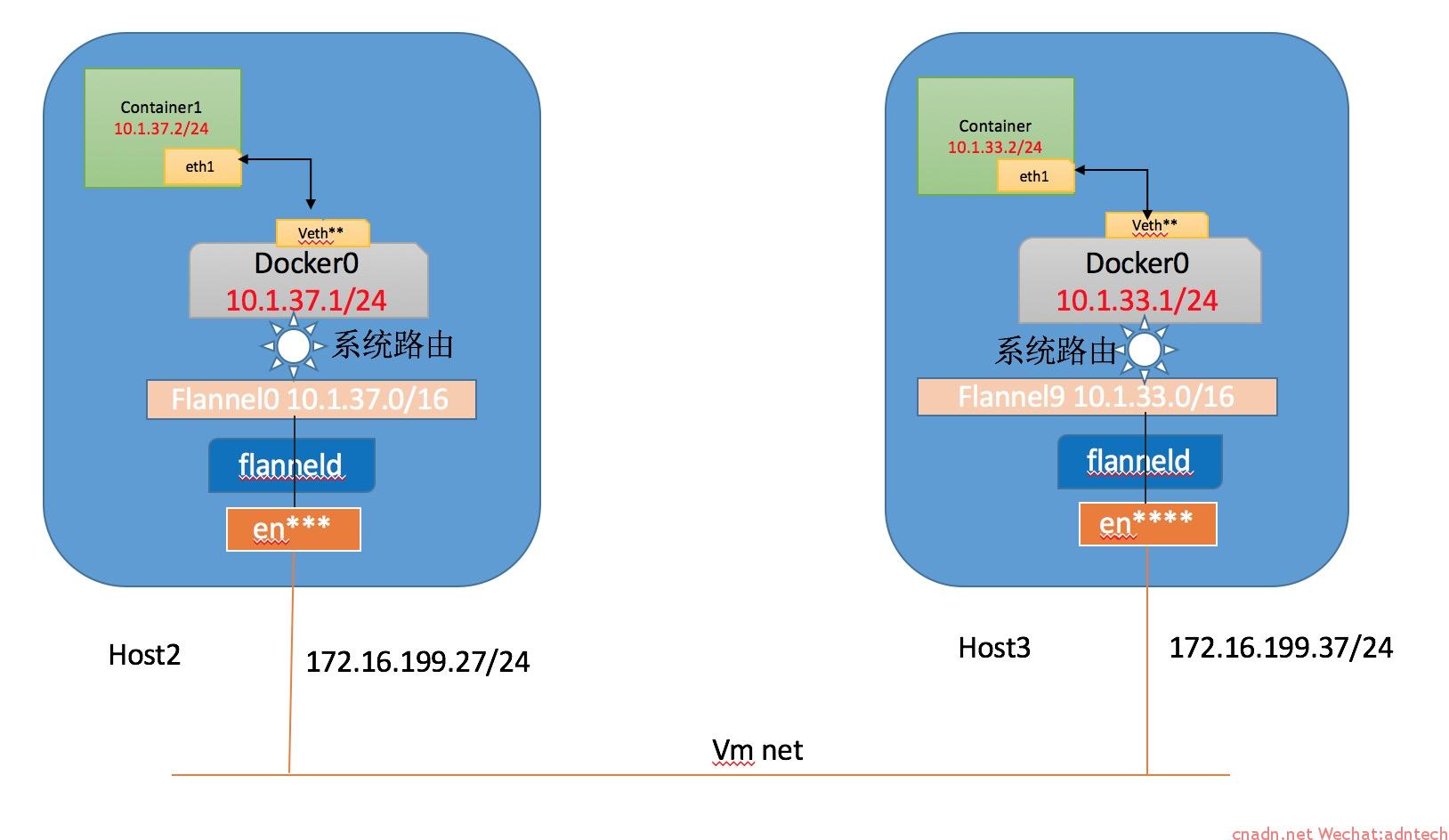

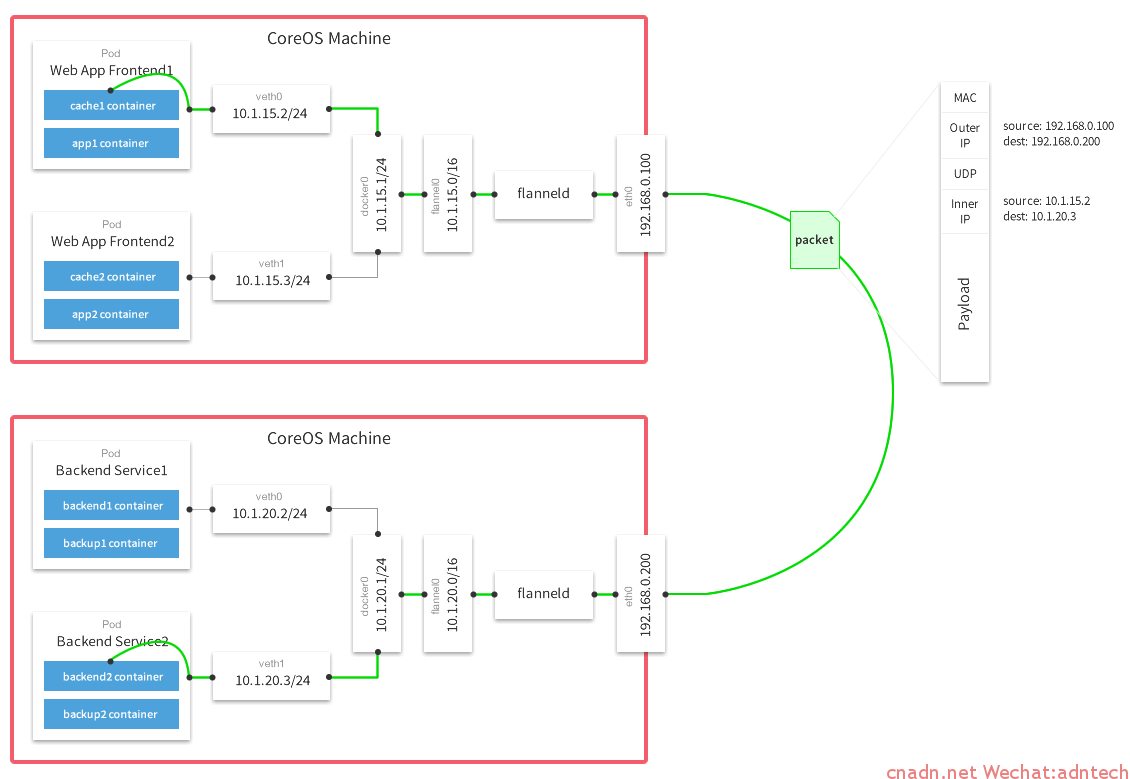

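Flannel is an open-source tool for managing and organizing container networks across hosts. It works by creating a flannel0 interface on every host and placing all hosts in one large network range. It then rewrites the IP of each docker0 bridge (by changing the docker daemon startup command) to an address in one subnet of that range. As a result, the overall network sits in one large range while each docker0 gets a different subnet. Packets from containers on each docker0 network are ultimately encapsulated by the flanneld process, either in an outer UDP packet (the default) or through a VXLAN tunnel, which makes cross-host container communication possible (the underlying physical network between hosts only needs to be routable). Flannel also supports other backends such as host-gw, aws, and gce. This lab uses the default UDP encapsulation.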

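Prerequisites

The etcd service must already be correctly installed. This lab already has a three-node etcd cluster.

The docker service must already be correctly installed.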

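Installing Flannel

You can download the latest prebuilt release from https://github.com/coreos/flannel/releases, or install flannel directly with the operating system's package manager. With the prebuilt release you only need to copy flanneld and mk-docker-opts.sh into a directory on the system PATH, but you must then create the related configuration files by hand, which is tedious. This lab uses CentOS, so it installs directly with yum: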

    yum update
    yum install flannel

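Configuring Flannel

After installation, edit /etc/sysconfig/flanneld and set FLANNEL_ETCD_PREFIX as shown below. FLANNEL_OPTIONS is set to tell flannel which physical interface to use for the encapsulated packets. Note the etcd endpoints setting: in this environment every node runs a member of the etcd cluster, so the local address 127.0.0.1 is used. Otherwise, change it to the correct etcd service URL.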

    # Flanneld configuration options

    # etcd url location. Point this to the server where etcd runs
    FLANNEL_ETCD_ENDPOINTS="http://127.0.0.1:2379"

    # etcd config key. This is the configuration key that flannel queries
    # For address range assignment
    FLANNEL_ETCD_PREFIX="/flannel/network"

    # Any additional options that you want to pass
    FLANNEL_OPTIONS="--iface=eno50332184"

Before starting flannel, set the overall network that flannel should use in etcd:

    etcdctl set /flannel/network/config '{ "Network": "10.1.0.0/16" }'
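The config value can carry more than the Network key. As a minimal sketch, assuming flannel's documented SubnetLen and Backend Type keys and a hypothetical helper name flannel_config, the JSON could be generated as follows. The etcdctl call is left as a comment because it needs a running etcd.

```shell
# Print a flannel network config as JSON.
# $1 = overlay network CIDR, $2 = backend type (for example udp or vxlan).
# flannel_config is an illustrative helper, not part of flannel.
flannel_config() {
    cat <<EOF
{ "Network": "$1", "SubnetLen": 24, "Backend": { "Type": "$2" } }
EOF
}

# With etcd v2 reachable, the result could be stored with:
#   etcdctl set /flannel/network/config "$(flannel_config 10.1.0.0/16 udp)"
flannel_config 10.1.0.0/16 udp
```

A SubnetLen of 24 matches the /24 subnets seen in the rest of this article.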

Start flannel:

    systemctl start flanneld.service

Check that the file /run/flannel/docker has been generated automatically. It should look similar to this:

    [root@docker3 ~]# more /run/flannel/docker
    DOCKER_OPT_BIP="--bip=10.1.33.1/24"
    DOCKER_OPT_IPMASQ="--ip-masq=true"
    DOCKER_OPT_MTU="--mtu=1472"
    DOCKER_NETWORK_OPTIONS=" --bip=10.1.33.1/24 --ip-masq=true --mtu=1472"
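The --mtu=1472 value is consistent with the overhead of UDP encapsulation: each packet gains an outer IPv4 header (20 bytes, assuming no IP options) and a UDP header (8 bytes). A small sketch of that arithmetic, where overlay_mtu is an illustrative helper name and a physical MTU of 1500 is assumed:

```shell
# Overlay MTU for UDP encapsulation:
# physical MTU minus outer IPv4 header (20 bytes) minus UDP header (8 bytes).
overlay_mtu() {
    echo $(( $1 - 20 - 8 ))
}

overlay_mtu 1500
```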

Edit the docker.service unit file: add After=flanneld.service and EnvironmentFile=/run/flannel/docker, and change the dockerd start line to ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

    [root@docker3 ~]# cat /usr/lib/systemd/system/docker.service
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    After=flanneld.service
    Wants=network-online.target

    [Service]
    Type=notify
    EnvironmentFile=/run/flannel/docker
    # the default is not to use systemd for cgroups because the delegate issues still
    # exists and systemd currently does not support the cgroup feature set required
    # for containers run by docker
    ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
    ExecReload=/bin/kill -s HUP $MAINPID
    # Having non-zero Limit*s causes performance problems due to accounting overhead
    # in the kernel. We recommend using cgroups to do container-local accounting.
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    # Uncomment TasksMax if your systemd version supports it.
    # Only systemd 226 and above support this version.
    #TasksMax=infinity
    TimeoutStartSec=0
    # set delegate yes so that systemd does not reset the cgroups of docker containers
    Delegate=yes
    # kill only the docker process, not all processes in the cgroup
    KillMode=process
    # restart the docker process if it exits prematurely
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s

    [Install]
    WantedBy=multi-user.target
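As an alternative to editing the packaged unit file in place, the same three changes could be expressed as a systemd drop-in, which package updates do not overwrite. The following is only a sketch: the helper name write_docker_dropin and the file name flannel.conf are assumptions, and the demonstration writes to a temporary directory rather than to /etc/systemd/system/docker.service.d.

```shell
# Write a systemd drop-in for docker.service into directory $1.
# On a real host, $1 would be /etc/systemd/system/docker.service.d
write_docker_dropin() {
    mkdir -p "$1" || return 1
    # The quoted 'EOF' keeps $DOCKER_NETWORK_OPTIONS literal so that systemd,
    # not the shell, expands it from the EnvironmentFile.
    cat > "$1/flannel.conf" <<'EOF'
[Unit]
After=flanneld.service

[Service]
EnvironmentFile=/run/flannel/docker
# An empty ExecStart= clears the packaged command before a new one is set.
ExecStart=
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
EOF
}

# Demonstration against a scratch directory, so nothing on the host changes.
demo_dir=$(mktemp -d)
write_docker_dropin "$demo_dir"
cat "$demo_dir/flannel.conf"
```

The design choice here is to leave the vendor unit untouched and keep only the flannel-specific overrides in one small file.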

After completing the steps above, reload systemd and restart the docker service:

    systemctl daemon-reload
    systemctl restart docker

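Check the docker0 and flannel0 interfaces. docker0 should have an address from one subnet of the network configured earlier in etcd, similar to the following output: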

    docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1472
            inet 10.1.33.1 netmask 255.255.255.0 broadcast 0.0.0.0
            inet6 fe80::42:52ff:fee9:30d0 prefixlen 64 scopeid 0x20<link>
            ether 02:42:52:e9:30:d0 txqueuelen 0 (Ethernet)
            RX packets 222479 bytes 18552624 (17.6 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 222446 bytes 21665160 (20.6 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472
            inet 10.1.33.0 netmask 255.255.0.0 destination 10.1.33.0
            unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)
            RX packets 226795 bytes 19050750 (18.1 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 220076 bytes 18485206 (17.6 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Enable the flanneld service at boot:

    [root@docker3 ~]# systemctl enable flanneld.service

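The installation and configuration above must be completed on every node, but the etcdctl step that sets the config key does not need to be repeated.

Container connectivity test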

    [root@docker2 ~]# docker exec busybox1 ping 10.1.33.2
    PING 10.1.33.2 (10.1.33.2): 56 data bytes
    64 bytes from 10.1.33.2: seq=0 ttl=60 time=0.570 ms
    64 bytes from 10.1.33.2: seq=1 ttl=60 time=0.837 ms
    64 bytes from 10.1.33.2: seq=2 ttl=60 time=0.891 ms
    64 bytes from 10.1.33.2: seq=3 ttl=60 time=1.093 ms

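Packet path analysis

- When a container on host 2 pings the IP of a container on host 3, the container follows its own routing table and sends the packet to its default gateway, which is docker0: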

        [root@docker2 ~]# docker exec busybox1 ip route
        default via 10.1.37.1 dev eth0
        10.1.37.0/24 dev eth0 src 10.1.37.2

        [root@docker2 ~]# ifconfig docker0
        docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1472
                inet 10.1.37.1 netmask 255.255.255.0 broadcast 0.0.0.0
                inet6 fe80::42:69ff:fe93:7458 prefixlen 64 scopeid 0x20<link>
                ether 02:42:69:93:74:58 txqueuelen 0 (Ethernet)
                RX packets 416092 bytes 34775496 (33.1 MiB)
                RX errors 0 dropped 0 overruns 0 frame 0
                TX packets 398175 bytes 38911310 (37.1 MiB)
                TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

A capture on docker0 shows the following packet. Note that the destination MAC at this point is the MAC of docker0:

    Frame 1: 98 bytes on wire (784 bits), 98 bytes captured (784 bits)
    Ethernet II, Src: 02:42:0a:01:25:02 (02:42:0a:01:25:02), Dst: 02:42:69:93:74:58 (02:42:69:93:74:58)
    Internet Protocol Version 4, Src: 10.1.37.2, Dst: 10.1.33.2
    Internet Control Message Protocol

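- docker0 sees that the destination IP is outside its own subnet, so the system consults the routing table, finds that traffic for 10.1.0.0/16 must go through the flannel0 device, and sends the packet to flannel0: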

        [root@docker2 ~]# ip route
        default via 192.168.0.1 dev eno16777736 proto static metric 100
        10.1.0.0/16 dev flannel0
        10.1.37.0/24 dev docker0 proto kernel scope link src 10.1.37.1
        172.16.199.0/24 dev eno50332184 proto kernel scope link src 172.16.199.27 metric 100
        192.168.0.0/24 dev eno16777736 proto kernel scope link src 192.168.0.183 metric 100

A capture on flannel0 shows that the packet no longer has an Ethernet header and that its source IP has been rewritten to flannel0's own address, 10.1.37.0:

    Frame 1: 84 bytes on wire (672 bits), 84 bytes captured (672 bits)
    Raw packet data
    Internet Protocol Version 4, Src: 10.1.37.0, Dst: 10.1.33.2
    Internet Control Message Protocol

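- The flanneld process picks up the packet, looks up in etcd which subnet 10.1.33.2 belongs to, obtains the host IP address registered for that subnet, and performs the encapsulation: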

        [root@docker1 ~]# etcdctl ls /flannel/network/subnets -recursive
        /flannel/network/subnets/10.1.97.0-24
        /flannel/network/subnets/10.1.11.0-24
        /flannel/network/subnets/10.1.37.0-24
        /flannel/network/subnets/10.1.33.0-24

        [root@docker1 ~]# etcdctl get /flannel/network/subnets/10.1.33.0-24
        {"PublicIP":"172.16.199.37"}
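The lookup in this step can be illustrated with a small helper that derives the etcd key for a container IP. This is only a sketch of the mapping, not a description of how flanneld is implemented. It assumes every host subnet is a /24, as in the key listing, and subnet_key is a hypothetical name.

```shell
# Map a container IP to the etcd key of its flannel subnet.
# Assumes /24 host subnets and the /flannel/network prefix used in this article.
subnet_key() {
    prefix=${1%.*}    # drop the last octet: 10.1.33.2 -> 10.1.33
    echo "/flannel/network/subnets/${prefix}.0-24"
}

subnet_key 10.1.33.2
# With etcd v2 reachable, the owning host could then be read with:
#   etcdctl get "$(subnet_key 10.1.33.2)"
```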

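A capture on the physical interface shows that flanneld uses the packet seen on flannel0 as the UDP payload, with port 8285 in the UDP header. Layer 3 then adds an IP header with the host addresses, and the frame is wrapped with the hosts' own Ethernet source and destination MAC addresses: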

    Frame 1: 126 bytes on wire (1008 bits), 126 bytes captured (1008 bits)
    Ethernet II, Src: Vmware_ae:11:8d (00:0c:29:ae:11:8d), Dst: Vmware_7d:f5:be (00:0c:29:7d:f5:be)
    Internet Protocol Version 4, Src: 172.16.199.27, Dst: 172.16.199.37
    User Datagram Protocol, Src Port: 8285, Dst Port: 8285
    Internet Protocol Version 4, Src: 10.1.37.0, Dst: 10.1.33.2
    Internet Control Message Protocol
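The frame sizes in the captures are consistent with this layering: the 84-byte inner IP packet seen on flannel0, plus 8 bytes of UDP header, 20 bytes of outer IPv4 header (assuming no IP options), and 14 bytes of Ethernet header, gives the 126 bytes seen on the wire. A sketch of that arithmetic, with encap_frame_size as an illustrative name:

```shell
# Size on the wire of a UDP-encapsulated frame, given the size in bytes of the
# inner IP packet seen on flannel0.
encap_frame_size() {
    inner=$1
    udp_hdr=8      # UDP header
    ip_hdr=20      # outer IPv4 header without options
    eth_hdr=14     # Ethernet II header
    echo $(( inner + udp_hdr + ip_hdr + eth_hdr ))
}

encap_frame_size 84
```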

Data path diagram:

Appendix: the diagram from the official GitHub repository:

Appendix 2:

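Right after flannel was configured, containers on other hosts could not be pinged. Troubleshooting showed that packets reached flannel0 on the destination host but were not forwarded to docker0. The cause was the rule with target DOCKER in the FORWARD chain, which kept the packets from entering docker0. While ping was failing, the iptables output looked like this: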

    Chain INPUT (policy ACCEPT 175K packets, 15M bytes)
     pkts bytes target prot opt in out source destination

    Chain FORWARD (policy DROP 3161 packets, 266K bytes)
     pkts bytes target prot opt in out source destination
    14904 1252K DOCKER-ISOLATION all -- * * 0.0.0.0/0 0.0.0.0/0
      876 73584 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED
     3161 266K DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0
    10867 913K ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0
        0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

    Chain OUTPUT (policy ACCEPT 182K packets, 16M bytes)
     pkts bytes target prot opt in out source destination

    Chain DOCKER (1 references)
     pkts bytes target prot opt in out source destination

    Chain DOCKER-ISOLATION (1 references)
     pkts bytes target prot opt in out source destination
    14904 1252K RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

Note this rule in the FORWARD chain above:

    3161 266K DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

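This rule hands the packet to the DOCKER chain, which does not accept it, so the packet falls through to the FORWARD policy of DROP. In other words, by default the docker service does not allow traffic from outside docker0 to pass through docker0 into the containers. One fix is to add a rule to the DOCKER chain: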

    iptables -I DOCKER -s 0.0.0.0/0 -d 0.0.0.0/0 ! -i docker0 -o docker0 -j ACCEPT

However, the docker service rebuilds this chain when it restarts, so the added rule is lost. Add the rule directly to the FORWARD chain instead:

    iptables -I FORWARD -s 0.0.0.0/0 -d 0.0.0.0/0 ! -i docker0 -o docker0 -j ACCEPT
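Because -I adds another copy of the rule every time it runs, a guarded version may be preferable in scripts. The sketch below uses iptables -C, which checks whether a matching rule already exists. The function name ensure_docker0_accept is an assumption, and the -s and -d 0.0.0.0/0 matches are omitted because they are the defaults.

```shell
# Insert the accept rule at the top of chain $1 unless it is already present.
# `iptables -C` exits 0 when a matching rule exists, non-zero otherwise.
ensure_docker0_accept() {
    chain=$1
    iptables -C "$chain" ! -i docker0 -o docker0 -j ACCEPT 2>/dev/null ||
        iptables -I "$chain" ! -i docker0 -o docker0 -j ACCEPT
}

# Usage on a host, as root:
#   ensure_docker0_accept FORWARD
```

On Docker versions that create a DOCKER-USER chain, passing DOCKER-USER instead of FORWARD may be worth testing, since that chain is intended for user-supplied rules. That variant has not been verified in this lab.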

Check the output:

    [root@docker3 ~]# iptables -nL -v
    Chain INPUT (policy ACCEPT 4059 packets, 345K bytes)
     pkts bytes target prot opt in out source destination

    Chain FORWARD (policy DROP 0 packets, 0 bytes)
     pkts bytes target prot opt in out source destination
      150 12600 DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0
      150 12600 DOCKER-ISOLATION all -- * * 0.0.0.0/0 0.0.0.0/0
       75 6300 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED
        0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0
       75 6300 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0
        0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0
        0 0 ACCEPT all -- !docker0 docker0 0.0.0.0/0 0.0.0.0/0

    Chain OUTPUT (policy ACCEPT 3881 packets, 340K bytes)
     pkts bytes target prot opt in out source destination

    Chain DOCKER (1 references)
     pkts bytes target prot opt in out source destination

    Chain DOCKER-ISOLATION (1 references)
     pkts bytes target prot opt in out source destination
      150 12600 RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

    Chain DOCKER-USER (1 references)
     pkts bytes target prot opt in out source destination
      150 12600 RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

After that, ping succeeds.

To allow all traffic by brute force, you can run:

    [root@docker3 ~]# iptables -F
    [root@docker3 ~]# iptables -X
    [root@docker3 ~]# iptables -Z
    [root@docker3 ~]# iptables -P INPUT ACCEPT
    [root@docker3 ~]# iptables -P OUTPUT ACCEPT
    [root@docker3 ~]# iptables -P FORWARD ACCEPT

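The iptables rules matter a great deal for container networking. Some of them exist to isolate networks for security, so simply deleting every rule that docker adds can cause problems and needs careful handling.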

Appendix 3: switching from the firewalld service to the iptables service:

    systemctl stop firewalld
    systemctl disable firewalld
    yum install iptables-services

Force an accept-all rule into the FORWARD chain at boot:

- Write a script

        [root@docker3 ~]# cat /etc/sysconfig/add-forward-iptable-rule.sh
        #!/bin/bash
        sleep 10
        iptables -I FORWARD -s 0.0.0.0/0 -d 0.0.0.0/0 -j ACCEPT

- Add a service in systemd and enable it at boot

        [root@docker3 ~]# cat /usr/lib/systemd/system/add-iptable-rule.service
        [Unit]
        Description=enable forward all for forward chain in filter table
        After=network.target
        [Service]
        ExecStart=/bin/bash /etc/sysconfig/add-forward-iptable-rule.sh
        [Install]
        WantedBy=multi-user.target

        [root@docker3 sysconfig]# systemctl enable add-iptable-rule.service
        Created symlink from /etc/systemd/system/multi-user.target.wants/add-iptable-rule.service to /usr/lib/systemd/system/add-iptable-rule.service.
